Today, I’ll teach you about a profound concept: our “cosmic endowment,” which is the maximum amount of resources available to us in the Universe. (Literally, the maximum. Once we’ve exhausted it, we’re done: dead, starved, etc.)

Any discussion of our cosmic endowment requires an understanding of thermodynamics and entropy. Both are notoriously confusing subjects, so let’s break them down…

In 1865, German physicist Rudolf Clausius coined the term “entropy.” Entropy is a metric that does two things for us: it measures the dispersion of energy, and it measures the amount of uncertainty in a system. The energy part belongs to thermal physics (thermodynamics). The uncertainty part belongs to statistical mechanics, which Ludwig Boltzmann and others developed in the years after Clausius.

Let’s talk about energy first…

Energy

Say I have an empty inflatable pool sitting in my backyard, and I put a plastic partition inside it that separates one half of the pool from the other. Now, say I fill one of those halves with water.

What does the water want to do?

You guessed it! It wants to spread out to the rest of the pool.

Believe it or not, every single thing in the entire Universe wants to “spread out to the rest of the pool.” And once it spreads out, it stays that way unless energy is spent to put it back. This is due to the “laws of thermodynamics.” They’re called laws because no verified violation of them has ever been observed in nature; as far as we know, they cannot be broken.

Here they are (from Wikipedia), translated…

First Law of Thermodynamics: When energy passes, as work, as heat, or with matter, into or out of a system, the system’s internal energy changes in accord with the law of conservation of energy.

Translation: Energy can’t be created or destroyed, only transformed (the law of conservation of energy). So the energy to do work has to come from somewhere: you can’t get more energy out of a system than you put into it.
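In symbols, the first law is usually written as below. This uses one common sign convention (textbooks differ, so treat it as an assumption): Q is heat added to the system and W is work done by the system. Energy carried in or out with matter would add one more term, omitted here.

$$\Delta U = Q - W$$

Every joule of work W the system does must be paid for, either by heat Q flowing in or by a drop in the system’s own internal energy U.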

Second Law of Thermodynamics: In a natural thermodynamic process, the sum of the entropies of the interacting thermodynamic systems increases.

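Translation: The total entropy of a set of interacting systems never goes down. You can lower the entropy of one system, but only by drawing on another system (for example, by borrowing its energy), and that other system’s entropy rises by at least as much. Therefore, entropy can only increase overall.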
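Written as an inequality for a system plus everything it interacts with, the second law says the entropy changes sum to zero or more, with equality only for idealized, perfectly reversible processes:

$$\Delta S_{\text{total}} = \Delta S_{\text{system}} + \Delta S_{\text{surroundings}} \geq 0$$

So ΔS_system can be negative, but only if ΔS_surroundings rises by at least as much.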

Third Law of Thermodynamics: The entropy of a system approaches a constant value as its temperature approaches absolute zero. It is impossible for any process, no matter how idealized, to reduce the entropy of a system to its absolute-zero value in a finite number of operations.

Translation: Absolute zero can never actually be reached; you can only get ever closer to it. And even at absolute zero, quantum effects would keep matter from being perfectly still (see “zero-point energy,” “quantum foam,” and “virtual particles” in my Quantum Primer). The implication I draw from this law is that the rate of entropy’s increase in the Universe can fundamentally never be completely stopped. I’d argue this is also one of the physical bases of time: time physically cannot pass slower than this lower limit in local space. I’ll talk more about this in the next premise.

Zeroth Law of Thermodynamics: If two systems are each in thermal equilibrium with a third system, they are in thermal equilibrium with each other.

Translation: This one seems trivial, but it’s important. It is the formal foundation of thermodynamics: it makes temperature a consistent, comparable quantity (it’s why a thermometer reading means anything at all). It’s called the “zeroth” law because the other laws rest on it, yet historically it was formulated after the other three (which is why I put it last here).

Systems

In thermodynamics, it’s also important to know that there are three types of “systems”: isolated, closed, and open. These are just what they sound like:

- Isolated System: Neither matter nor energy can be exchanged with other systems

- Closed System: Energy can be exchanged with other systems, but not matter

- Open System: Both energy and matter can be exchanged with other systems

There’s been a good deal of historical debate about which type of system the Universe itself is. If the Universe is infinite (still an open question, though its apparent spatial flatness is consistent with it), it would be an open system (by definition, you can’t have a closed or isolated system without an “outside”). Additionally, because of the third law, there can never be a truly closed or isolated system within the Universe: any “wall” separating systems will always carry some minimum fluctuation.

Now back to entropy and our inflatable pool…

In our example above, the water confined to one half of the pool had latent (gravitational potential) energy waiting to be released. Once I removed the partition, the water immediately rushed outwards over the rest of the pool. As it fell and spread, that latent energy was converted into kinetic energy, and as the sloshing died down, friction and turbulence turned the kinetic energy into a slight warming of the water. The energy wasn’t destroyed; it was simply transformed into a form less useful to us. The result was a pool with less available latent energy, in a state less useful than it was before.

In a sense, entropy measures the amount of energy no longer available to do work. The more of a system’s available energy gets dissipated, the less latent energy remains and the greater the entropy. The pool gained entropy when the partition was removed. In fact, every real-world (irreversible) process increases the overall entropy of a system and its surroundings.
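To put rough numbers on the pool example, here is a minimal Python sketch. The pool dimensions (6 m² of floor, 0.5 m of water in one half) and the 20 °C water temperature are hypothetical assumptions, and the sketch treats all of the released gravitational potential energy as ending up as heat in the water.

```python
# Assumed, illustrative numbers: a 6 m^2 pool with 0.5 m of water in one half.
RHO_WATER = 1000.0  # density of water, kg/m^3
G = 9.81            # gravitational acceleration, m/s^2
C_WATER = 4186.0    # specific heat of liquid water, J/(kg*K)


def column_potential_energy(area_m2: float, depth_m: float) -> float:
    """Potential energy of a flat-bottomed water column, measured from its floor.

    The centre of mass sits at depth/2, so PE = rho * g * area * depth**2 / 2.
    """
    return RHO_WATER * G * area_m2 * depth_m ** 2 / 2


def remove_partition(total_area_m2: float, depth_m: float, temp_k: float):
    """Return (energy dissipated in J, temperature rise in K, entropy gained in J/K)."""
    half_area = total_area_m2 / 2
    before = column_potential_energy(half_area, depth_m)
    # The same volume spread over twice the area ends up at half the depth.
    after = column_potential_energy(total_area_m2, depth_m / 2)
    dissipated = before - after  # becomes heat once the sloshing dies down
    mass = RHO_WATER * half_area * depth_m
    temp_rise = dissipated / (mass * C_WATER)
    # The temperature barely changes, so Delta S ~= Q / T is an excellent approximation.
    entropy_gain = dissipated / temp_k
    return dissipated, temp_rise, entropy_gain


dissipated, temp_rise, entropy_gain = remove_partition(6.0, 0.5, 293.15)
print(f"energy dissipated: {dissipated:.1f} J")
print(f"temperature rise:  {temp_rise:.1e} K")
print(f"entropy gained:    {entropy_gain:.2f} J/K")
```

Whatever the pool’s size, exactly half of the initial potential energy is released, because halving the depth while doubling the area halves area × depth². The warming is tiny (a few ten-thousandths of a kelvin), yet the entropy gain is real and can’t be undone without spending energy.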

Make sense?

It’s a bit unintuitive at first because entropy is an inverse measure: it tracks the energy that is unavailable to do work.

- Lower Entropy = More latent energy available

- Higher Entropy = Less latent energy available
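One standard way to make “available energy” precise is the Helmholtz free energy. The document doesn’t name a specific formalism, so this is one choice among several:

$$F = U - TS$$

Here U is internal energy, T is absolute temperature, and S is entropy. At constant temperature, the most work a system can deliver equals the decrease in F, so for a given U and T, more entropy means less energy available to do work.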

Entropy increases as the potential to do stuff decreases. On a deeper level, entropy increases every time energy is transformed (or transferred) because of a phenomenon known as “waste heat.” No real process can ever be 100% efficient: the second law caps the efficiency of any heat engine at the Carnot limit, 1 − T_cold/T_hot, and the third law’s ban on reaching absolute zero means that limit can never reach 100%. Therefore, every process generates waste heat: energy that is an incidental byproduct of a thermodynamic process and is “lost” to its intended purpose. Recapturing this wasted energy is central to many fields of engineering that seek more efficient machines and processes, though the laws of thermodynamics place hard upper limits on how efficient any system can become.
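The efficiency ceiling in the paragraph above is the Carnot limit, 1 − T_cold/T_hot, which follows from the second law. This short Python sketch evaluates it for a hypothetical 800 K heat source paired with ever-colder sinks: the waste-heat fraction shrinks, but it would only vanish at T_cold = 0 K, which the third law rules out.

```python
def carnot_efficiency(t_hot_k: float, t_cold_k: float) -> float:
    """Second-law upper bound on heat-engine efficiency between two reservoirs (kelvin)."""
    if not 0 < t_cold_k < t_hot_k:
        # T_cold = 0 K is excluded: the third law says it can't be reached.
        raise ValueError("require 0 < t_cold_k < t_hot_k")
    return 1.0 - t_cold_k / t_hot_k


# A hypothetical 800 K heat source paired with ever-colder sinks.
for t_cold in (300.0, 30.0, 3.0, 0.3):
    best = carnot_efficiency(800.0, t_cold)
    print(f"T_cold = {t_cold:>5} K: at most {best:.4%} efficient, "
          f"at least {1 - best:.4%} waste heat")
```

Real engines fall well short of this bound because of friction and other irreversibilities, so their waste heat is larger still.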

Perhaps the best way to think of entropy is as energy being dispersed throughout the Universe. To do useful work, you need to exploit differences: in temperature, pressure, concentration, and so on. The portion of energy available for useful work is known as “thermodynamic free energy.”
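A classic textbook result shows why differences are what matter. Take two identical blocks with heat capacity C at temperatures T1 and T2, and run an ideal (reversible) engine between them until they reach a common temperature. This Python sketch computes the most work that engine could deliver; the block sizes and temperatures are hypothetical.

```python
import math


def max_work_two_bodies(heat_capacity_j_per_k: float, t1_k: float, t2_k: float):
    """Most work a reversible engine can extract from two identical finite bodies.

    Reversible means zero net entropy change:
        C*ln(Tf/T1) + C*ln(Tf/T2) = 0  ->  Tf = sqrt(T1 * T2)
    Energy conservation then gives W = C * (T1 + T2 - 2*Tf).
    Returns (final common temperature in K, work in J).
    """
    t_final = math.sqrt(t1_k * t2_k)
    work = heat_capacity_j_per_k * (t1_k + t2_k - 2.0 * t_final)
    return t_final, work


# Hypothetical blocks, each with a heat capacity of 1000 J/K.
print(max_work_two_bodies(1000.0, 400.0, 100.0))  # → (200.0, 100000.0)
print(max_work_two_bodies(1000.0, 250.0, 250.0))  # → (250.0, 0.0)
```

Both pairs hold the same total thermal energy (C × 500 K), yet only the pair with a temperature difference can do any work. If the 400 K and 100 K blocks were simply touched together instead, they would settle at 250 K, deliver no work, and raise total entropy by C·ln(250²/(400·100)), about 446 J/K.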

Eventually, in theory, the entire Universe will reach a state of maximum entropy where there is simply no more thermodynamic free energy to be found. This is known as “heat death,” and it is considered one of the possible eventual outcomes for our Universe. Not to worry, though: this would take an incomprehensibly long time, so long that it’s pointless to write out the projected number of years, because no human can grasp that amount of time. We’re safe and sound in the present.

However, this isn’t to say entropy is guaranteed to increase everywhere, only that it’s guaranteed to increase overall.

What I mean is that entropy is relentless on a Universal scale, but not on a local scale. For example, we can decrease the entropy of the Earth by borrowing energy from the Sun (another system) to reconfigure things here into higher-energy states (we do, in fact!), but we can’t decrease the entropy of the entire Universe.
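Here is a rough entropy budget for that Sun-to-Earth exchange, as a minimal Python sketch. It assumes Earth is in a steady state (re-emitting about as much energy as it absorbs) and uses approximate effective temperatures of about 5772 K for the Sun’s surface and about 255 K for Earth’s emission to space. Because the same energy leaves at a much lower temperature, Earth exports far more entropy than it receives; that surplus is what pays for any local decrease here, and it’s why the total still goes up.

```python
# Approximate effective temperatures (kelvin).
SUN_PHOTOSPHERE_K = 5772.0   # temperature of the sunlight Earth absorbs
EARTH_EMISSION_K = 255.0     # temperature at which Earth radiates back to space


def earth_entropy_budget(energy_j: float):
    """Entropy carried in and out when `energy_j` of sunlight passes through Earth.

    Simplification: radiation entropy is taken as Q / T. For black-body radiation it is
    really (4/3) * Q / T, but that factor scales both terms equally.
    Returns (entropy in, entropy out, net entropy exported), all in J/K.
    """
    entropy_in = energy_j / SUN_PHOTOSPHERE_K
    entropy_out = energy_j / EARTH_EMISSION_K
    return entropy_in, entropy_out, entropy_out - entropy_in


s_in, s_out, s_net = earth_entropy_budget(1.0)  # per joule absorbed and re-emitted
print(f"entropy in:  {s_in:.2e} J/K")
print(f"entropy out: {s_out:.2e} J/K ({s_out / s_in:.1f}x as much)")
print(f"net export:  {s_net:.2e} J/K")
```

The outgoing entropy is roughly 22 to 23 times the incoming entropy, so there is plenty of room for local ordering on Earth while the combined entropy of Earth, Sun, and space keeps rising.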

Uncertainty

Entropy also measures uncertainty: specifically, the number of possible “microstates” (exact microscopic arrangements) consistent with what we know about a system.

As we discussed previously, uncertainty is the product of fallibility and complexity, and complexity is essentially the number of possible states a system can have. More states means more complexity and thus more uncertainty. Entropy measures the uncertainty that arises from the total number of possible microstates comprising a macroscopic system. All else being equal, more states means higher entropy, and fewer states means lower entropy.
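Boltzmann’s formula makes this relationship quantitative. If Ω is the number of microstates compatible with what we know about a system and k_B is Boltzmann’s constant (exactly 1.380649 × 10⁻²³ J/K in the SI), then

$$S = k_B \ln \Omega$$

Dropping k_B and using base-2 logarithms gives the same quantity in bits, H = log₂ Ω, which is the information-theory (Shannon) entropy when every microstate is equally likely. Either way, doubling the number of microstates adds a fixed amount of entropy: k_B ln 2, or one bit.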

For example, let’s say we have two tabletop puzzles: a small one with 20 pieces and a large one with 1,000 pieces. Obviously, the 1,000-piece puzzle is much more complex. This means it is also more uncertain; that is, there are more possible positions you have to try for each piece before you find the correct fit. With the smaller puzzle, you could try 19 wrong positions for a piece before finding the right one; with the larger puzzle, you could try 999. Smaller puzzles have fewer microstates; larger puzzles have more.

Therefore, the larger puzzle has more informational entropy, that is, more uncertainty.
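To put numbers on the puzzle comparison, here is a minimal Python sketch that counts every possible arrangement of the pieces and expresses that count in bits. It is a deliberate simplification: it assumes any piece can go in any slot, ignores rotations and tab shapes, and treats every arrangement as equally likely.

```python
import math


def arrangement_entropy_bits(pieces: int) -> float:
    """Information (in bits) needed to single out one arrangement of `pieces` pieces,
    assuming every ordering of the pieces is equally likely: log2(pieces!).

    math.lgamma(n + 1) equals ln(n!) and avoids building the huge integer.
    """
    return math.lgamma(pieces + 1) / math.log(2)


for n in (20, 1000):
    print(f"{n:>5} pieces: about {arrangement_entropy_bits(n):,.0f} bits")
```

Going from 20 to 1,000 pieces multiplies the piece count by 50 but the entropy by roughly 140, because the number of arrangements grows factorially.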

Disorder

Now let’s talk about what entropy isn’t.

You may have heard entropy described as “disorder.” This old-school analogy is well-intentioned (it was meant to educate the public), but it falls on its face. Disorder is a poor analogy because entropy is about dispersion and uncertainty, not disorder.

Here’s where it breaks down…

In my puzzle example above, you could scramble the 1,000-piece puzzle into great disorder and still have exactly the same number of microstates as when it was completed and “in order.” Whether or not the pieces match the picture on the box doesn’t change the fact that there is a set number of pieces, each with a set number of possible states. Even after you solve the puzzle, each piece has just as many possible states as before. So disorder is an inaccurate description of entropy: the amount of disorder doesn’t actually correlate with the amount of entropy.

Make sense?

Perhaps a more fundamental reason not to use disorder to measure entropy is that disorder doesn’t measure heat. A hot system is not necessarily more or less ordered than a cold one, and heat transfer is the very reason entropy was invented in the first place. A concept that can’t account for heat transfer cannot be the same thing as entropy.
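Clausius’s original definition shows why heat can’t be left out: entropy change is defined by heat flow and temperature, with no reference to order at all. For heat δQ_rev transferred reversibly at absolute temperature T,

$$dS = \frac{\delta Q_{\text{rev}}}{T}$$

The same amount of heat raises entropy more when it enters a cold system than a hot one, which is why heat flowing from hot to cold increases total entropy: Q/T_cold − Q/T_hot > 0.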

Entropy is also not the same thing as “decay” or “messiness” or “disorganization.” These are all just synonyms for “disorder,” which we now know is not a good way to describe entropy.

Cosmic Implications

Now that you understand the basics, we can form some interesting extrapolations about our Universe…

- The Universe is an open system: It has no outside. Therefore, by definition, it has no walls and must be both infinite and open in scope

- Energy can’t be created or destroyed, only transformed or transferred: Our endowment of “stuff” is limited by what we can gather to us; we can’t spontaneously create more of it

Now let’s add what we learned in my relativity primer…

- Nothing with mass may reach or exceed the speed of light, c, locally

- The Universe is expanding at a rate given by the Hubble constant, H_0: the farther away something is, the faster it recedes from us, and beyond a certain distance this recession speed exceeds c
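Hubble’s law says recession speed is proportional to distance, v = H_0 × d, so the distance at which recession reaches c is c/H_0 (the Hubble radius). This Python sketch computes it, assuming H_0 = 70 km/s/Mpc (published measurements currently range from roughly 67 to 73). Treat it as a first-order estimate: because the expansion rate changes over time, the true boundary of what we can ever reach (the cosmological event horizon) lies somewhat farther out in the standard cosmological model.

```python
C_KM_S = 299_792.458          # speed of light, km/s
GLY_PER_MPC = 3.261563777e-3  # billions of light-years per megaparsec

# Assumed Hubble constant in km/s/Mpc; measurements currently span roughly 67 to 73.
H0 = 70.0


def recession_speed_km_s(distance_mpc: float, h0: float = H0) -> float:
    """Hubble's law, v = H0 * d: recession speed grows in proportion to distance."""
    return h0 * distance_mpc


def hubble_radius_mpc(h0: float = H0) -> float:
    """Distance at which v = H0 * d reaches the speed of light: d = c / H0."""
    return C_KM_S / h0


r = hubble_radius_mpc()
print(f"Hubble radius: {r:,.0f} Mpc (about {r * GLY_PER_MPC:.1f} billion light-years)")
for d in (100.0, 1_000.0, r, 2 * r):
    print(f"d = {d:>8,.0f} Mpc -> v = {recession_speed_km_s(d) / C_KM_S:.2f} c")
```

With this value, the Hubble radius comes out to roughly 4,300 Mpc, or about 14 billion light-years; anything twice as far is receding at about 2c.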

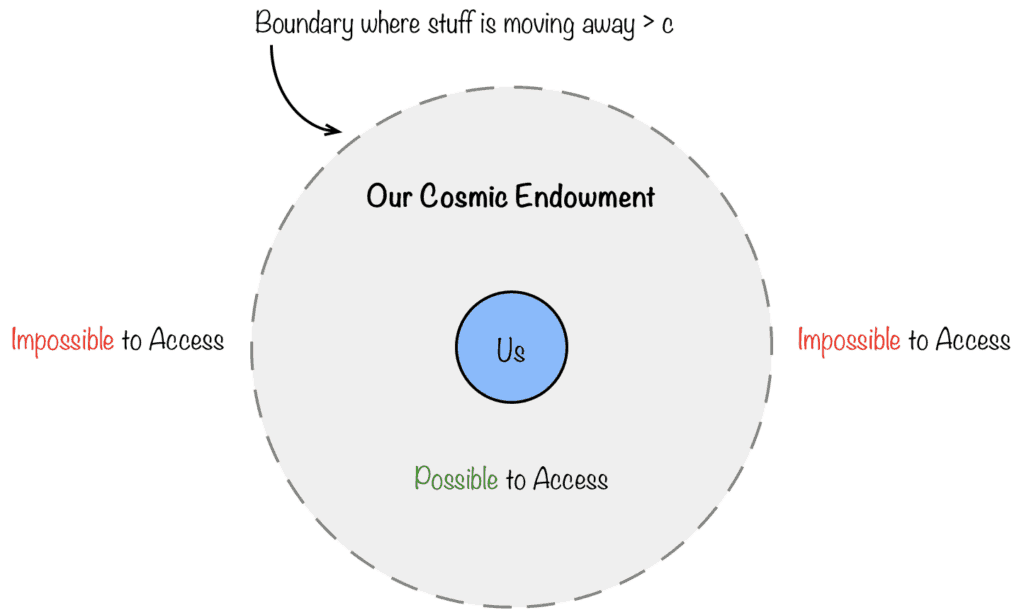

This means our local Universe is actually a closed system. Why? Let’s think through it…

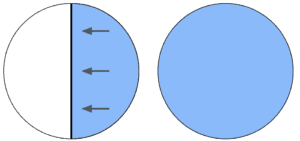

If the Universe is infinitely expansive, energy can always be borrowed from somewhere, no matter what (by definition, you can’t run out of energy if you have an infinite amount of it). However, once we take into account the “speed limit” on energy transfer (c) along with the expansion rate of our Universe (H_0), the amount of energy that could ever reach a particular location is limited.

In effect, these limitations draw a sphere around any particular location. Its boundary divides the resources available to us from those fundamentally unreachable to us, because they’re receding faster than c.
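For a very rough sense of scale of what sits inside that sphere, this Python sketch multiplies the Hubble sphere’s volume by the cosmological critical density, 3H_0²/(8πG). Every input is an assumption: a flat universe at exactly the critical density, the Hubble radius standing in for the true horizon, and H_0 = 70 km/s/Mpc. The total also counts dark energy (roughly 68% of the budget) and dark matter (roughly 27%); only about 5% is ordinary matter, and none of this says how much could actually be harvested.

```python
import math

C = 299_792_458.0                          # speed of light, m/s
G = 6.674_30e-11                           # gravitational constant, m^3 kg^-1 s^-2
METRES_PER_MPC = 3.085_677_581_491_367e22  # metres in one megaparsec


def hubble_sphere_mass_kg(h0_km_s_per_mpc: float = 70.0) -> float:
    """Mass-energy (as kg) inside the Hubble sphere of a flat universe at critical density.

    Both the Hubble-sphere boundary and the critical density are simplifying assumptions.
    """
    h0 = h0_km_s_per_mpc * 1_000.0 / METRES_PER_MPC   # H0 in 1/s
    radius_m = C / h0                                  # Hubble radius, m
    rho_crit = 3.0 * h0 ** 2 / (8.0 * math.pi * G)     # critical density, kg/m^3
    volume_m3 = 4.0 / 3.0 * math.pi * radius_m ** 3
    return rho_crit * volume_m3                        # algebraically c**3 / (2*G*H0)


mass = hubble_sphere_mass_kg()
print(f"mass-equivalent:   {mass:.1e} kg")
print(f"energy (E = mc^2): {mass * C ** 2:.1e} J")
```

The two factors simplify to c³/(2GH_0): about 9 × 10⁵² kg, or roughly 8 × 10⁶⁹ J of mass-energy, under the assumptions above.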

Therefore…

Premise Six: Unless we can achieve faster-than-light (FTL) travel, bypass the laws of thermodynamics, or slow down the expansion of our Universe, our species will eventually encounter a physically unavoidable heat death.

Sources: